Question-Based Testing and A.I.

Testing through questions or examinations, such as multiple choice questions (MCQs), is a useful assessment method for checking competencies and the application of knowledge within your subject area. Tests can be delivered in multiple formats and support a wide variety of question styles and types, which lets you assess a range of student skills. However, the academic community has raised concerns about artificial intelligence (A.I.) and its potential impact on question-based assessments. We have curated some recommendations that can help minimise the impact of A.I. on question-based tests.

Variety of question types

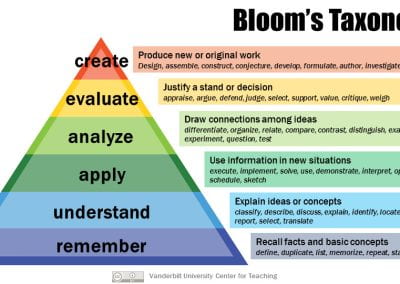

When setting a question-based test, you will want to test a variety of skill sets which are appropriate for the level of study and the expected learning outcomes. It’s recommended that each test contains a variety of question types and levels. It can be useful to think of the Bloom’s taxonomy pyramid of cognitive skills (pictured), which shows a mixture of lower and higher order thinking skills, all of which have their place within assessments. We have listed suggested question types below; however, most question types can be used at multiple levels of study. By using a variety of question types and skill levels, you can help minimise the impact of A.I. when testing.

Lower Order Thinking Skills (LOTS)

Remember, Understand, Apply

Suitable question types:

- Multiple-choice questions.

- True/False.

- Matching.

- Fill in the blanks.

- Picture-based MCQs.

Higher Order Thinking Skills (HOTS)

Analyse, Evaluate and Create

Suitable question types:

- Short form answers.

- Essay responses.

- Assertion-based questions.

- Multiple choice questions.*

- Hypothetical scenarios.

- Reflective questions.

*For example, case-based questions that test higher order thinking skills for complex ideas or concepts.

Multiple choice questions can vary in the number of answers available to students; most MCQs offer between three and five. It can be useful to include ‘distractor’ answers within your list of choices, i.e. answers that are plausible alternatives to the correct answer but require a deeper understanding from the student to rule out. A.I. can struggle to interpret the differences between these types of answers. This can be especially useful when the questions involve interpreting data or scenarios, where the student has to apply and explain knowledge rather than just remember it.

Multi-part questions, or questions that are interlinked, often result in the A.I. being unable to answer. This is because the A.I. can only read information from the current question and does not see the information in the other parts as being connected. Separating a question into key segments of information:

1. Can help students segment and focus on the current question.

2. Does not give the A.I. enough data to read in order to provide a full answer.

However, multi-part questions need clear instructional language. Students need key signposts so that they understand which data they are connecting, and where it comes from.

Multiple choice questions can be useful to gauge basic knowledge and understanding, and are suitable for lower order thinking skills questions. However, MCQs can also be used as part of an application or scenario-based approach, which tests the higher skill sets of application and analysis. For example, you could outline a case study and ask students which theoretical or practical approaches they would choose, and to justify their reasoning. This enables students to apply their own knowledge, synthesise information and understand that information within a different context. It can also make the question harder for an A.I. to interpret, as limited wider information is available for it to read from.

Data sets can be used to test students’ application of knowledge. Varying the data that each student works with ensures that a wider set of answers is possible. There are three recommended ways of varying data sets:

- Use the built-in formula design tools to vary answers within a set limit. This means that each question set will differ for each student. Multiple data sets can be prepared ahead of time and marked automatically by the testing tool.

- Use an external link, outside the test but still accessible to students, to a data set which the A.I. can’t access.

- Use a simulation model to generate several key data sets and randomly assign a data set to each student.
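As a rough illustration of the third option, the sketch below simulates a handful of data sets and gives each student one of them. It is not taken from any particular testing tool: the function names, the student IDs, the normal distribution and the "calculate the mean" question are all assumptions made for the example. It also assigns data sets by hashing the student ID rather than by a true random draw, so that the same student always receives the same data set when the test is marked.

```python
import hashlib
import random


def generate_data_sets(n_sets, n_values, seed=0):
    """Simulate n_sets data sets of n_values readings each (seeded, so repeatable)."""
    rng = random.Random(seed)
    return [
        [round(rng.gauss(50.0, 10.0), 1) for _ in range(n_values)]
        for _ in range(n_sets)
    ]


def assign_data_set(student_id, n_sets):
    """Map a student ID to a data set index that stays the same on every run."""
    digest = hashlib.sha256(student_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % n_sets


def is_correct(student_answer, data_set, tolerance=0.05):
    """Mark a hypothetical 'calculate the mean' question against the student's own data set."""
    expected = sum(data_set) / len(data_set)
    return abs(student_answer - expected) <= tolerance


data_sets = generate_data_sets(n_sets=5, n_values=8)
for student_id in ["s001", "s002", "s003"]:  # hypothetical student IDs
    index = assign_data_set(student_id, len(data_sets))
    print(student_id, "-> data set", index, data_sets[index])
```

Because the correct answer is computed from the data set each student was given, the marking can still be automatic even though the answers differ between students.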

Randomising the data set can help minimise A.I.’s impact, but it can often be useful to pair varying data sets with scenario-based questions or reflective feedback so that students can demonstrate their wider understanding. One example of this is giving the student a scenario where the answer is wrong and asking the student to explain why this is the case. This can be done via MCQs or short form essay replies, and demonstrates a deeper understanding of the approach.

Short and long form answers are useful to identify wider understanding and application of knowledge. However, if the question is based around remembering structures or processes, e.g. what five things would you look for with x… this can easily be interpreted by an A.I. Instead, it can be useful to use short or long form questions which relate directly to the student’s own experience and reflections, which makes the answer more personalised. This style of question can also be used for comparing and contrasting key theories and forming opinions, therefore testing higher order thinking skills.

When creating questions, and looking at Bloom’s taxonomy, we know that every level of question has its place within testing. However, some basic recall questions can easily be changed so that, instead of recall, they focus on application and evaluation of knowledge. This can be done through the ‘flip the question’ approach.

This involves the following steps:

- Write a simple fact-checking style knowledge question.

- Flip the question around by giving the student that fact and asking them to explain this in context.

Below we have two examples. The first raises a Remember question to an Understand question. The second moves an Apply question on into evaluation.

| Example question 1 (Remember > Understand) | Example question 2 (Apply > Apply & Evaluate) |
| --- | --- |
| Standard: What were the names of the experimenters who created the [approach] test? | Standard: What theory of learning is subject A demonstrating and what recommendations would you suggest for future treatment? |
| Flipped variant: [Experimenters’ names], who created the [approach] test, were able to show that:… | Flipped variant: The medical professional in this case study has applied [insert treatment] for subject A. Do you agree with their approach and why? |

This approach can help students access higher order thinking skills, as it asks them to justify and compare ideas. Questions which are designed to go beyond basic recall and require further reading can minimise A.I.’s impact on test answers.

References

Armstrong, P. (2010). Bloom’s Taxonomy. Vanderbilt University Center for Teaching. Web: Bloom’s taxonomy pyramid (external link).